Flux models have revolutionized AI-powered creativity. In our previous article, we explored the business potential of Flux. Now, we're diving deeper into the technical side.

This guide will walk you through:

- The different versions of Flux

- How Flux compares with Stable Diffusion

- The process of fine-tuning Flux using Replicate

- Flux prompt engineering tips

- Cost of fine-tuning Flux and hardware requirements

Whether you're a curious beginner or an experienced developer, you'll gain valuable insights into customizing Flux models for your specific needs.

Ready to unlock the full potential of Flux? Let's get started!

Flux.1 – The New Era of Image Generation

The Flux models come from Black Forest Labs, a new research lab founded by the original creators of the Stable Diffusion models for image and video generation. They represent a cutting-edge approach in generative AI, designed specifically for image generation tasks.

The models are built on the diffusion transformer (DiT) architecture, which allows models with a high number of parameters to maintain efficiency. The Flux models have 12 billion parameters for high-quality image generation.

Flux has three versions:

1. Flux.1 [schnell]:

Flux.1 [schnell] is optimized for fast, local development and personal use. This version is designed to run efficiently on high-performance laptops, providing rapid image generation without the need for cloud resources.

2. Flux.1 [dev]:

Flux.1 [dev] is an open-weight model intended for non-commercial applications. It allows developers and hobbyists to explore the full potential of AI image generation while being adaptable for various projects.

3. Flux.1 [pro]:

Flux.1 [pro] is the top-tier version, designed for professional, commercial use. It offers the highest performance and is suitable for businesses looking to incorporate generative AI image services into their offerings. Companies are already adopting the Pro version for its robust capabilities and high-quality outputs.

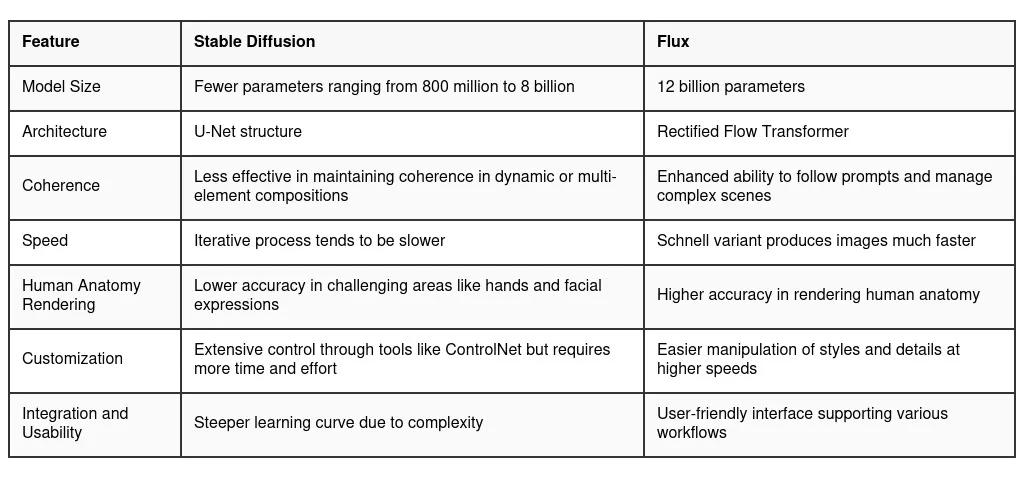

Flux vs Stable Diffusion

Source: Medium Article

Flux models represent a significant improvement over Stable Diffusion XL, the team’s last major release under Stability AI:

- Image Quality and Prompt Adherence: Flux is noted for its superior image quality and prompt adherence compared to Stable Diffusion 3. It is considered on par with Midjourney V6, making it a strong competitor in the AI image generation space.

- Speed and Efficiency: The model offers rapid image generation capabilities, with different versions tailored for various needs. For instance, Flux Schnell generates images about 10 times faster than the Pro model, although at a slightly reduced quality.

- Parameter Size: With 12 billion parameters, Flux is significantly larger than previous models like Stable Diffusion XL, which had around 3.5 billion parameters. This increase in parameters contributes to its enhanced performance and capabilities.
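To put the parameter counts in perspective, the sketch below estimates how much memory the weights alone would occupy. It assumes 2 bytes per parameter (16-bit weights), which is an assumption for illustration and not a figure from Black Forest Labs. It also ignores activations and any auxiliary components, so real memory use would be higher.

```python
# Rough weight-memory estimate. bytes_per_param=2 is an ASSUMPTION (16-bit weights).
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the size of the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


FLUX_PARAMS = 12e9   # parameter count quoted in this article
SDXL_PARAMS = 3.5e9  # parameter count quoted in this article

flux_gb = weight_memory_gb(FLUX_PARAMS)
sdxl_gb = weight_memory_gb(SDXL_PARAMS)
size_ratio = FLUX_PARAMS / SDXL_PARAMS

print(f"Flux weights: ~{flux_gb:.0f} GB, SDXL weights: ~{sdxl_gb:.0f} GB")
print(f"Flux has ~{size_ratio:.1f}x as many parameters as SDXL")
```

Under that assumption, the gap in parameter count translates directly into a proportionally larger memory footprint, which is one reason a hosted service is convenient for training.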

Now, let's dive into some exciting real-world comparisons between Stable Diffusion and Flux! We'll focus on challenging scenarios where AI models have traditionally struggled, giving you a side-by-side look at how these two powerhouses perform. Get ready to be amazed as we put these models to the test in areas like human anatomy, text rendering, and complex scene generation. These comparisons will not only showcase the capabilities of each model but also highlight the incredible progress in AI-generated imagery.

Comparison 1 - Generating human anatomy like fingers correctly

Prompt

A 1st person view of a man holding a paintbrush over a canvas.
The canvas has a drawing of an unfinished horse in a garden.
His other hand over the paint colors.

Stable Diffusion - Hands and fingers generated incorrectly ❌

FLUX.1 ✅

Our take

FLUX.1 is a couple of steps ahead, while Stable Diffusion 3 notoriously struggles with fingers, as expected.

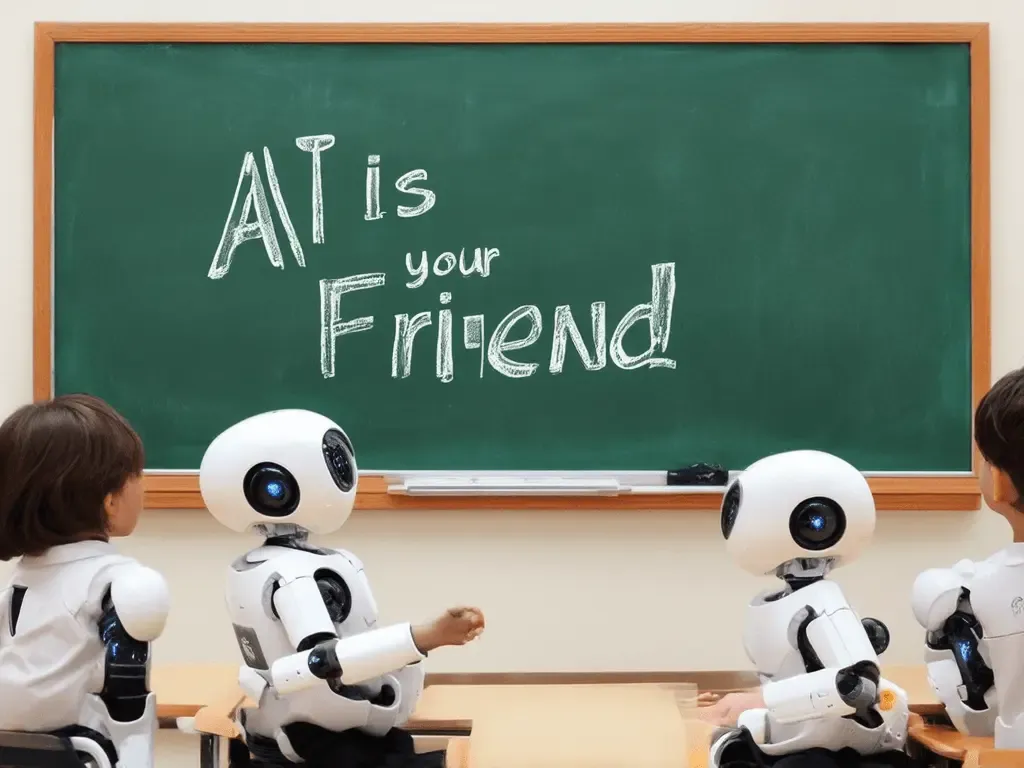

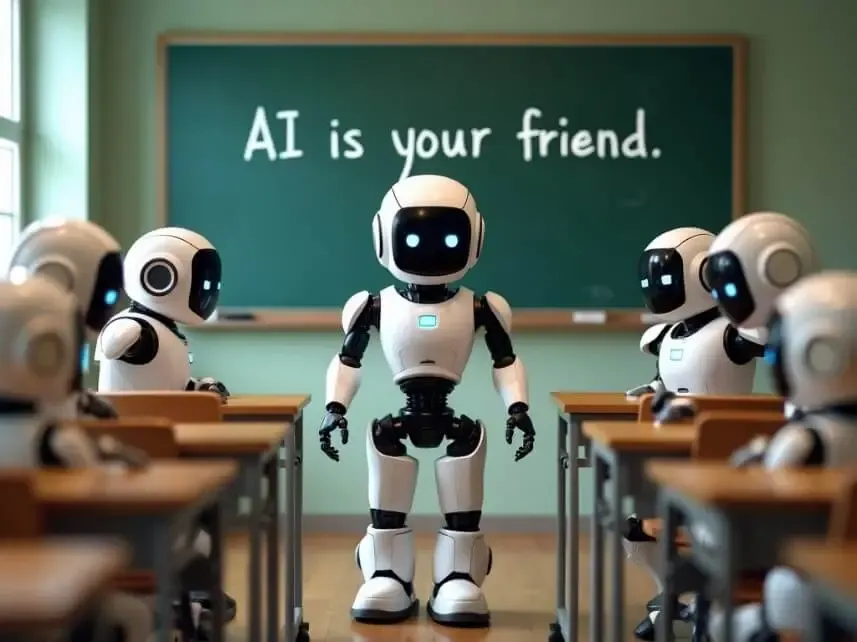

Comparison 2 - Generating images with text perfectly

Prompt

Create a classroom of young robots.
The chalkboard in the classroom has 'AI Is Your Friend' written on it.

Stable Diffusion 3 - Typo in "friend" ❌

FLUX.1 ✅

Our Take

FLUX.1 just looks right. Stable Diffusion, on the other hand, fails to deliver even after many generation attempts.

Comparison 3 - Generating images with details

Prompt

A misty forest landscape with Snow White and her seven
dwarves walking along a carpet of lilies at
sunset with enchanting fireflies drawn in cartoon style.
A river is flowing in the background,
and trees have 5 apples on them in total.

Stable Diffusion ❌

FLUX.1 ✅

Our Take

Stable Diffusion generated something powerful, but in all the wrong ways. An unsettling mix of gnomes with Snow White appeared in every generation, and the apples were usually missing.

Based on these comparisons, it's clear that Flux emerges as the winner across all three scenarios. Its superior performance in generating accurate human anatomy, rendering text perfectly, and creating detailed, complex scenes demonstrates its advanced capabilities over Stable Diffusion.

With Flux's impressive results in mind, you might be wondering how to harness this power for your own projects. The good news is that you can! In the next section, we'll dive into the exciting process of fine-tuning Flux using Replicate.com. This practical guide will walk you through the steps to customize Flux for your specific needs, allowing you to tap into its full potential.

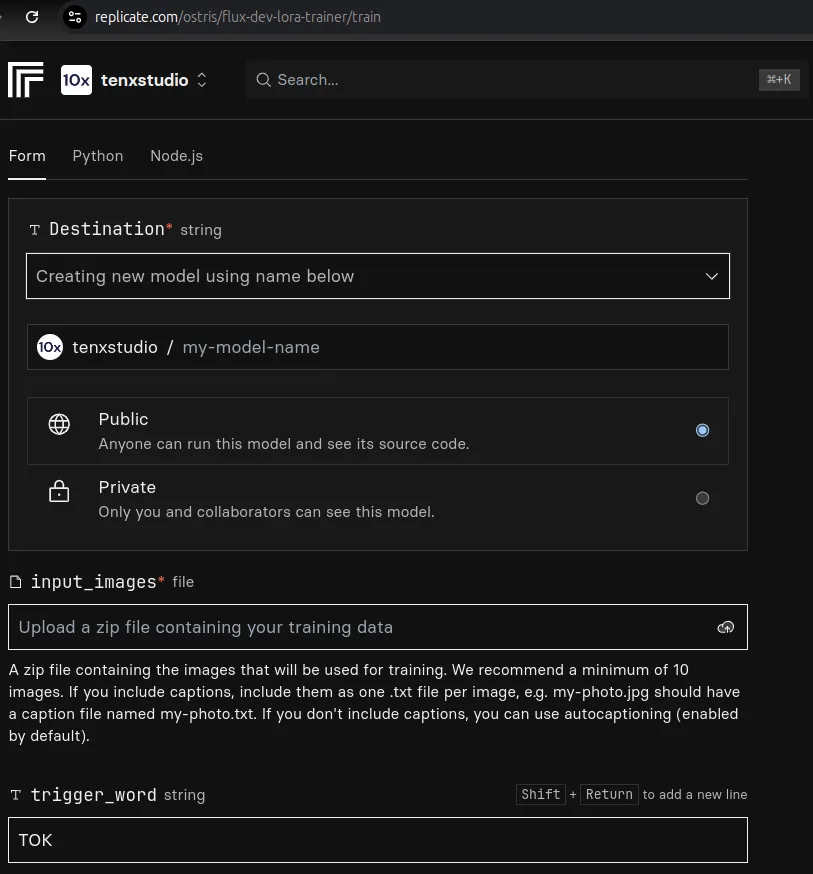

Fine-tuning Flux Using Replicate

Ready to fine-tune a Flux model? Let's break the process down step by step:

(For now, it’s only possible to fine-tune Flux-dev and Flux-schnell.)

1. Prepare Your Data:

- Collect 12-20 high-quality images

- Ensure diversity in settings, poses, and lighting

- Use large images in JPEG or PNG formats

2. Set Up Environment:

- Install necessary dependencies (Python, PyTorch, Replicate API)

- Configure your API keys and authentication

3. Preprocess Images:

- Resize images to a consistent dimension

- Augment data if necessary (e.g., rotations, flips)

- Save the images in a compressed file (.zip)

4. Select Flux Model: You can create models under your own personal account, or in an organization if you want to share access with your team or other collaborators.

5. Upload your images as a .zip file

6. Choose your trigger_word: A unique string of characters like TOK that is not a word or phrase in any language. See the last Flux fine-tune guide on faces for more details about how to choose a good trigger word.

7. Configure Parameters:

- Set learning rate, batch size, and number of epochs

- Choose an appropriate optimizer (e.g., Adam)

8. Start training, Monitor and Validate:

- Track loss and performance metrics during training

- Generate sample images periodically to assess progress

9. Save and Export:

- Save model checkpoints

- Export the final model for deployment

10. Train again if needed: If your first fine-tuned result is not producing exactly what you want, try the training process again with a higher number of steps, higher-quality images, or more images. There’s no need to create a new model each time: you can keep using your existing model as the destination, and each newly completed training will push to it as a new version.
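The data preparation steps above (collect 12-20 JPEG or PNG images, then save them in a .zip) can be sketched with the Python standard library alone. The function names `collect_images` and `build_training_zip` and the folder and file names in the usage comment are hypothetical, chosen only for illustration. The sketch does not resize or augment images.

```python
import zipfile
from pathlib import Path

ALLOWED_SUFFIXES = {".jpg", ".jpeg", ".png"}  # formats named in step 1


def collect_images(folder):
    """Return the sorted JPEG/PNG files that sit directly inside `folder`."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in ALLOWED_SUFFIXES
    )


def build_training_zip(folder, zip_path, min_images=12, max_images=20):
    """Check the image count against the 12-20 guideline, then write a flat .zip."""
    images = collect_images(folder)
    if not min_images <= len(images) <= max_images:
        raise ValueError(
            f"Found {len(images)} images; the guideline is {min_images}-{max_images}."
        )
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for image in images:
            archive.write(image, arcname=image.name)  # store without folder paths
    return images


# Hypothetical usage:
# build_training_zip("photos", "training-images.zip")
```

The count check is a guideline rather than a hard requirement, so the `min_images` and `max_images` defaults can be loosened if your dataset calls for it.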

If you want to launch your training via the API, follow these steps:

1. Install Replicate’s Python client library (prefix the command with ! when running it in a notebook)

    pip install replicate

2. Set the REPLICATE_API_TOKEN environment variable

    export REPLICATE_API_TOKEN=r8_Hv2**********************************

This is your default API token. Keep it to yourself.

3. Import the client in your Python file

    import replicate

4. Train your selected model (flux-dev or flux-schnell) using Replicate’s API:

    training = replicate.trainings.create(
        # You need to create a model on Replicate that will be the destination for the trained version.
        destination="tenxstudio/model-name",
        # Placeholder: the version identifier of the pre-trained model you selected.
        version="your_selected_pre-trained_model_version",
        input={
            "steps": 1000,
            "lora_rank": 16,
            "optimizer": "adamw8bit",
            "batch_size": 1,
            "resolution": "512,768,1024",
            "autocaption": True,
            # Placeholder: point this at your .zip of training images.
            "input_images": "path_to_your_zip_file",
            "trigger_word": "TOK",
            "learning_rate": 0.0004,
            "wandb_project": "flux_train_replicate",
            "wandb_save_interval": 100,
            "caption_dropout_rate": 0.05,
            "cache_latents_to_disk": False,
            "wandb_sample_interval": 100,
        },
    )

Once training finishes, you can export the model’s weights.
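Training takes a while, so a script usually needs to wait for it to finish. The helper below is a minimal sketch of such a wait loop. It assumes the training object exposes a `status` attribute and a `reload()` method, and that the terminal status strings are "succeeded", "failed", and "canceled". Check both assumptions against the current Replicate client documentation. The name `wait_for_training` and its parameters are hypothetical.

```python
import time

# ASSUMED terminal status strings; verify against the Replicate documentation.
TERMINAL_STATUSES = {"succeeded", "failed", "canceled"}


def wait_for_training(training, poll_seconds=30.0, max_polls=240, sleep=time.sleep):
    """Poll `training` until it reaches a terminal status and return that status.

    `training` is any object with a `.status` attribute and a `.reload()` method
    that refreshes it.
    """
    for _ in range(max_polls):
        if training.status in TERMINAL_STATUSES:
            return training.status
        sleep(poll_seconds)
        training.reload()  # refresh the status
    if training.status in TERMINAL_STATUSES:
        return training.status
    raise TimeoutError(f"Training still '{training.status}' after {max_polls} polls")


# Hypothetical usage with the object created in step 4:
# final_status = wait_for_training(training)
```

Passing `sleep` as a parameter keeps the loop easy to test without real waiting. In production code the default `time.sleep` is used.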

Showcase Before and After

One of the most exciting aspects of fine-tuning a Flux model is seeing the results. Let's take a look at some original photos used for fine-tuning and compare them with images generated by our fine-tuned model. This comparison will help us understand the improvements and unique features that our model has developed.

Image generated with Flux.1 [dev]

Prompt

Manel, a confident 28-year-old woman, presenting at a tech conference. She's wearing a stylish black hijab and a professional black blazer.

Result

The fine-tuned model has successfully captured my facial features and hijab style while adapting to a new, professional context. It maintains my identity and cultural attire while showcasing me in a business setting, demonstrating the model's ability to generalize and combine learned features with new scenarios.

Prompt Engineering for Optimal Results

Crafting effective prompts is crucial for getting the best results from your fine-tuned Flux model. The right prompt can make the difference between a mediocre output and a stunning, accurate representation of your subject.

The Importance of Effective Prompts

- Prompts guide the model's creative process

- Well-crafted prompts can enhance accuracy and relevance

- Prompts help maintain consistency with the subject's identity and characteristics

Tips for Crafting Prompts

- Be specific about identity markers (e.g., age, cultural attire)

- Include context and setting details

- Specify desired emotional expressions or poses

- Mention key physical characteristics to maintain consistency

- Experiment with different levels of detail to find the right balance

You can also ask an LLM to help you write strong prompts.
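One way to apply these tips consistently is to assemble prompts from named parts. The helper below is a small sketch, not a required format: `build_prompt` and its parameters are hypothetical, "TOK" stands in for whatever trigger word you trained with, and the expression text is illustrative.

```python
def build_prompt(trigger_word, identity, setting, expression="", details=()):
    """Join the trigger word, identity markers, setting, expression and details."""
    parts = [trigger_word, identity, setting, expression, *details]
    # Drop empty pieces so optional fields can be left out.
    return ", ".join(part.strip() for part in parts if part and part.strip())


prompt = build_prompt(
    trigger_word="TOK",
    identity="a confident 28-year-old woman",
    setting="presenting at a tech conference",
    expression="smiling at the audience",  # illustrative value
    details=["wearing a stylish black hijab and a professional black blazer"],
)
print(prompt)
```

Keeping each tip as a separate argument makes it easy to experiment with different levels of detail by adding or removing one field at a time.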

Cost Estimation and Hardware Requirements

When it comes to fine-tuning Flux models, understanding the associated costs and hardware requirements is crucial for planning your projects effectively. Let's break down the key factors:

Training Costs

Fine-tuning Flux models on Replicate operates on a pay-as-you-go basis, with billing calculated per second of compute time. Here's what you need to know:

- Hardware: Trainings run on Nvidia H100 GPUs, offering top-tier performance for AI workloads.

- Cost Rate: As of our latest information, the cost is $0.001528 per second of compute time.

- Typical Scenario: For a standard fine-tuning session using about 20 training images and 1000 steps, you can expect:

  - Duration: Approximately 20 minutes

  - Estimated Cost: Around $1.85 USD

- Scalability: Costs scale linearly with training time. Larger datasets or more training steps will increase the duration and, consequently, the cost.
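The arithmetic behind these figures is simple enough to script. The sketch below multiplies the quoted per-second rate by the training duration. At 20 minutes that comes to roughly $1.83, in line with the "around $1.85" estimate above. The second function assumes that duration scales linearly with the number of steps from the 1000-step, 20-minute reference point. That is an assumption for planning purposes, and actual durations will vary. Both function names are hypothetical.

```python
H100_RATE_USD_PER_SECOND = 0.001528  # rate quoted in this article; it may change


def training_cost_usd(minutes, rate=H100_RATE_USD_PER_SECOND):
    """Cost of a training run billed per second of compute time."""
    return minutes * 60 * rate


def estimated_minutes(steps, reference_steps=1000, reference_minutes=20.0):
    """ASSUMPTION: duration scales linearly with steps from the reference run."""
    return steps / reference_steps * reference_minutes


for steps in (500, 1000, 2000):
    minutes = estimated_minutes(steps)
    print(f"{steps} steps: ~{minutes:.0f} min, ~${training_cost_usd(minutes):.2f}")
```

Running a quick estimate like this before launching a longer job makes it easier to decide whether a higher step count is worth the extra cost.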

Post-Training Usage

Once your model is fine-tuned, using it is cost-effective:

- You're only billed for the actual compute time used to generate images.

- This makes running inferences with your fine-tuned model economical for various application scales.

Hardware Considerations

While Replicate handles the hardware for training, it's worth understanding the implications:

- Cloud-Based Solution: You don't need to invest in expensive GPU hardware upfront.

- Scalability: The cloud infrastructure allows for easy scaling if you need to run multiple training sessions or larger models.

- Local Testing: For development and testing, a moderate-spec machine is sufficient, as the heavy lifting happens on Replicate's servers.

Cost Management Tips

- Optimize Your Dataset: A well-prepared, focused dataset can reduce necessary training time.

- Monitor Training Progress: Keep an eye on training metrics to avoid unnecessary extended runs.

- Start Small: Begin with shorter training sessions to test your setup before committing to longer, more expensive runs.

- Leverage Checkpoints: Use training checkpoints to resume training instead of starting from scratch if you need more training time.

By understanding these cost and hardware factors, you can plan your Flux fine-tuning projects more effectively, balancing your budget with your AI development needs.

Conclusion

As we conclude our exploration of Flux models and the art of fine-tuning, we've uncovered the potential of this innovative technology. From its unique architecture that combines the strengths of diffusion and flow-based models, to the practical steps of fine-tuning on Replicate, we've seen how Flux can be tailored to specific creative tasks. We've also delved into the crucial role of prompt engineering and the practical considerations of costs and hardware requirements, providing a comprehensive view of what it takes to harness the full power of Flux.

The future of AI-generated imagery is evolving rapidly, with Flux at the forefront of this revolution. As you embark on your journey with fine-tuned Flux models, remember that every great AI application starts with curiosity and experimentation. Whether you're an artist, developer, or researcher, the possibilities are boundless. So, fire up your GPUs, craft your prompts, and start fine-tuning. The next breakthrough in AI-generated imagery could be just a few epochs away. Happy fine-tuning, and may your Flux models generate wonders beyond imagination!